OCI Artifacts. The story so far

Container registries are becoming the universal artifact storage. The OCI updated the Distribution (registry) and Image specifications to 1.1 in 2024 to support any file type. Here's the back story, details on the changes, and various tools that can take advantage of this registry enhancement.

👨‍💻 OCI Registries for Everything?

Docker didn’t just invent the modern container; it also created the artifact store for container images, which it called the registry. The registry code was quickly open-sourced as "distribution" in 2014 and eventually standardized as the OCI Distribution Specification in 2018, alongside the OCI image and runtime specifications.

The Original Image Registry

We all know it by various casual names like "Docker Registry", "OCI Registry", or just an "Image Registry." Registries tend to be "set and forget" file storage systems that bridge source code, CI, and the servers that run your containers.

You may not have thought much about the registry you’re using, but that's about to change (if it hasn't already for you).

OCI-compatible registries (in all their forms) are everywhere, and many organizations use multiple registries in the cloud, on-prem, and in their CI. No big deal; OCI registries are just a new type of artifact store for a new kind of artifact. Many of us already have one or more artifact stores in our organization. But now we have an N+1 problem where we're expected to run an artifact store for every package format we want to support.

Doh! We just broke a rule of mine: "Don't install a new solution similar to the old solution without a plan to phase out the old." - Says Me

Many of us have more of these "file and metadata" storage systems floating around. The core problem is that we were building and using artifact-specific storage systems. Sure, a few proprietary solutions run many different unrelated storage APIs on a single tool for all the various package and artifact types, but that's not ideal for us or the industry, and that's also not the "CNCF way." We need a single artifact storage standard flexible enough to be used by most of the tools we have today with artifact needs.

The OCI Distribution standard and our OCI registry products are likely a reasonable solution for more than just container images. Many tools are also starting to use OCI registries to store their non-image artifacts.

Helm was the tip of the iceberg

Your first use of an OCI registry for something other than a container image was likely storing Helm charts in an OCI registry repo. The old way, in Helm v1 and v2, was to stick Helm charts on a web server. Then, starting in Helm v3.5, we were given a new storage option: using a registry via Helm's new OCI artifact support.

Many other tools also support this idea, including security tools storing SBOMs, image-signing, Kubernetes security profiles, OPA policies, and even non-container-related artifacts like WASM modules and Homebrew packages.

👉 How artifacts are changing the OCI registry

I rarely work with a team with only one container registry, much less a single artifact storage solution.

Well, I think we're on the cusp of the OCI (Docker) Registry becoming the one artifact and package storage system to rule them all... eventually.

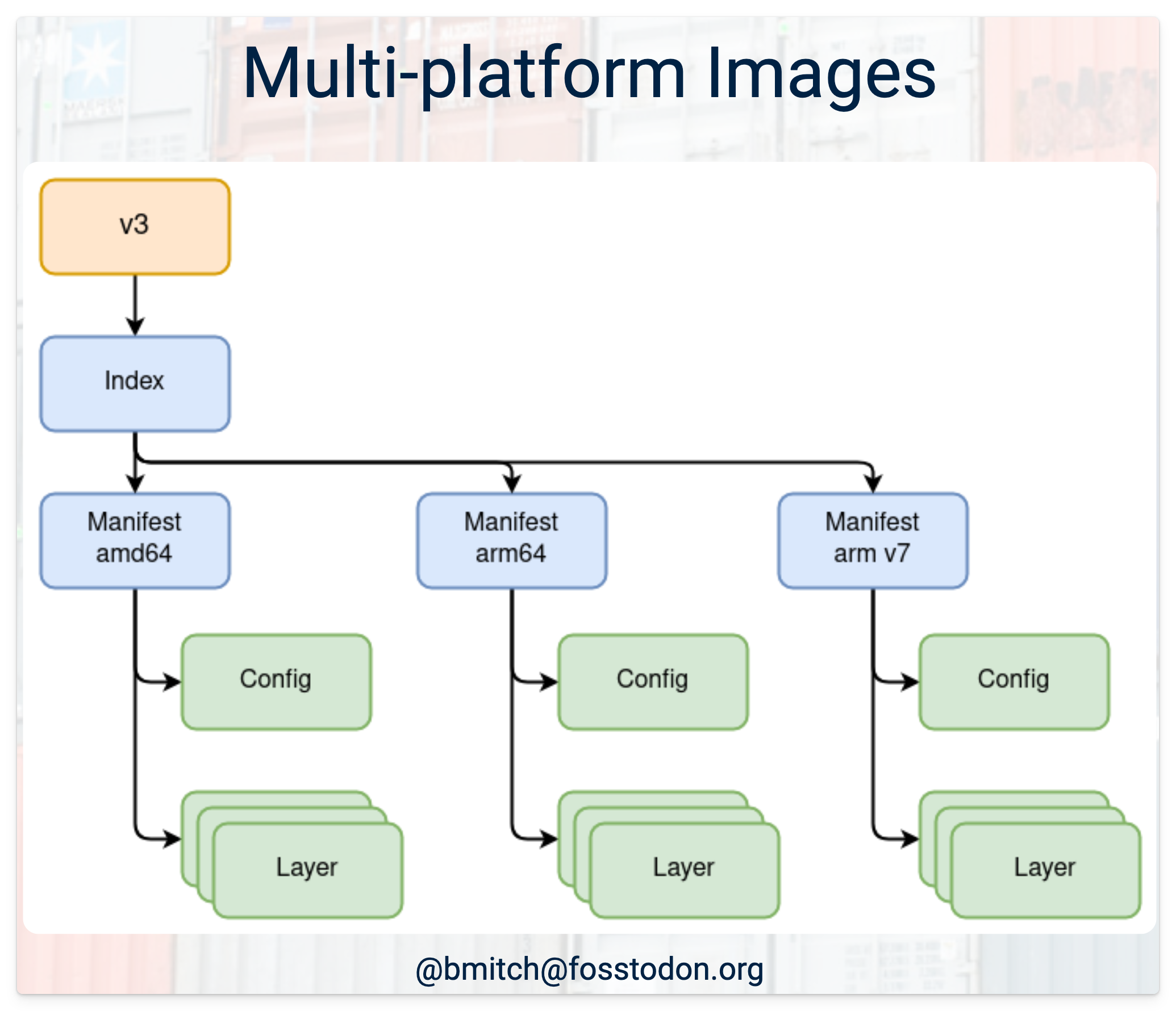

As a refresher, the OCI registry you know and love is full of two main data objects: manifests (metadata) and layer blobs (for a container image, those are gzipped tarballs). The API also has tags, each of which points to a manifest. That manifest may point to another set of manifests (if you're building multi-arch images), and each image manifest then points to one or more layers. Here's a "full-featured" example of the data relationships from Brandon Mitchell's OCI Distribution 1.1 RC talk on how registries relate data objects today.
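To make those relationships concrete, here's a minimal sketch of a toy in-memory "registry" in Python. It is not how any real registry is implemented; the `store`, `tags`, `put`, and `resolve_layers` names are made up for illustration. It only shows the idea that everything is stored under its SHA-256 digest and that a tag is a mutable name pointing at one of those digests.

```python
import hashlib
import json


def digest_of(data: bytes) -> str:
    """Return a content address in the 'sha256:<hex>' form registries use."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def descriptor(media_type: str, data: bytes) -> dict:
    """A descriptor is the pointer one object uses to reference another."""
    return {"mediaType": media_type, "digest": digest_of(data), "size": len(data)}


# Toy storage (hypothetical): every blob and manifest lives under its digest.
store: dict[str, bytes] = {}


def put(data: bytes) -> str:
    """Store bytes under their own digest and return that digest."""
    d = digest_of(data)
    store[d] = data
    return d


layer = b"pretend this is a gzipped tarball"
config = json.dumps({"architecture": "amd64", "os": "linux"}).encode()
put(layer)
put(config)

# An image manifest points at one config blob and one or more layer blobs.
manifest = json.dumps({
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.manifest.v1+json",
    "config": descriptor("application/vnd.oci.image.config.v1+json", config),
    "layers": [descriptor("application/vnd.oci.image.layer.v1.tar+gzip", layer)],
}).encode()
put(manifest)

# An image index (used for multi-arch images) points at per-platform manifests.
index = json.dumps({
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.index.v1+json",
    "manifests": [{
        **descriptor("application/vnd.oci.image.manifest.v1+json", manifest),
        "platform": {"architecture": "amd64", "os": "linux"},
    }],
}).encode()

# Tags are the only mutable names; each one points at a digest.
tags = {"latest": put(index)}


def resolve_layers(tag: str) -> list[bytes]:
    """Walk tag -> index -> manifest -> layer blobs."""
    idx = json.loads(store[tags[tag]])
    layers: list[bytes] = []
    for entry in idx["manifests"]:
        man = json.loads(store[entry["digest"]])
        layers += [store[item["digest"]] for item in man["layers"]]
    return layers
```

Calling `resolve_layers("latest")` follows the same chain a client follows on a pull: tag, then index, then manifest, then layers.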

The beginning of the OCI artifact sprawl

Helm was one of the first teams and CLIs to use registries to store non-container image data at a large scale. Helm now has OCI registry support by default in recent versions. It took advantage of the fact that the OCI distribution standard allowed for different media types in the image layers, even if it wasn't a true container image layer. Here are the Helm docs on how to use your existing registries to store charts.

As more people wanted to put more things in their registry besides containers, we started to see conference talks and official proposals about various tools moving to support "OCI artifact" storage rather than their traditional storage.

There are two prominent use cases driving this evolution of container registries. 👉 The first is storing and connecting data related to a specific image (SBOM, CVE scan, image signing). 👉 The second is data objects that are semi-related (or completely unrelated) to containers (Helm, Tekton, Homebrew). They want to take advantage of the ubiquitous and content-addressable nature of OCI registries.

First use case: Image-manifest-adjacent artifacts

From SBOMs to image signing, there's a growing list of things directly related to a specific container image manifest. Primarily led by the software supply chain security movement, many artifacts now relate to an image in a registry and need to be stored somewhere for reference. Turns out, OCI registries are good places to store those too.

That's already happened, even before the OCI Distribution 1.1 spec was released in 2024. Although it's not ideal, some tools create the relationship between the image and the adjacent artifacts using various tricks like specially crafted image tags to connect the dots. Hopefully, all those tools are moving to the new OCI Artifact spec in OCI 1.1.
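As an illustration of the "specially crafted tag" trick, here's a minimal Python sketch. It assumes a convention where the image digest is rewritten into a valid tag name and given a suffix, which is the style Cosign has used for signatures (a tag like `sha256-<hex>.sig`). The function name and the example digest are made up for this sketch, and other tools may use different schemes.

```python
def digest_to_tag(image_digest: str, suffix: str = "") -> str:
    """Turn 'sha256:<hex>' into a tag-safe name such as 'sha256-<hex>.sig'.

    Tags cannot contain ':', so the separator is swapped for '-'.
    The suffix (for example '.sig') is an assumed per-tool convention.
    """
    algorithm, _, hex_value = image_digest.partition(":")
    if not algorithm or not hex_value:
        raise ValueError(f"not a digest: {image_digest!r}")
    return f"{algorithm}-{hex_value}{suffix}"


# Hypothetical digest, shortened for readability.
signature_tag = digest_to_tag("sha256:3b7ebf54", ".sig")
```

The weakness is easy to see: the relationship lives only in a naming convention, so registries and UIs have no way to know the tag is a signature rather than a strangely named image.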

Docker's own image builder provides provenance attestations adjacent to the image it builds and will push those to the registry along with the image.

But, once we have all these signatures, scan reports, and SBOMs stored in the registry, how can we (more cleanly) find them with an official API to connect all the manifest pieces?

The solution to that problem is called the Referrers API, and it was released with OCI Distribution 1.1 in 2024.
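Here's a rough Python sketch of what a Referrers API lookup looks like from the client side. It assumes the endpoint shape from the Distribution 1.1 spec (`/v2/<name>/referrers/<digest>`, with an optional `artifactType` query parameter) and that the response is an image index whose entries carry an `artifactType`. The registry host, repository, digests, and artifact types are placeholders, and the sketch makes no network calls.

```python
from typing import Optional
from urllib.parse import urlencode


def referrers_url(registry: str, repository: str, digest: str,
                  artifact_type: Optional[str] = None) -> str:
    """Build the URL that lists artifacts referring to the given manifest digest."""
    url = f"https://{registry}/v2/{repository}/referrers/{digest}"
    if artifact_type:
        url += "?" + urlencode({"artifactType": artifact_type})
    return url


def filter_referrers(index: dict, artifact_type: str) -> list[dict]:
    """Filter client-side, since a registry may not apply the requested filter."""
    return [m for m in index.get("manifests", [])
            if m.get("artifactType") == artifact_type]


# Placeholder response in the shape of an OCI image index.
sample_response = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.index.v1+json",
    "manifests": [
        {"mediaType": "application/vnd.oci.image.manifest.v1+json",
         "digest": "sha256:111", "size": 100,
         "artifactType": "application/vnd.example.sbom.v1+json"},
        {"mediaType": "application/vnd.oci.image.manifest.v1+json",
         "digest": "sha256:222", "size": 120,
         "artifactType": "application/vnd.example.signature.v1+json"},
    ],
}

sboms = filter_referrers(sample_response, "application/vnd.example.sbom.v1+json")
```

The point is that the question "what is attached to this image?" becomes a single documented request instead of a guess about tag names.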

This had been in the works for a while, and I'll let OCI maintainer (and Docker Captain) Brandon Mitchell explain it better from a talk about the challenge of connecting all these new artifact types to an OCI image:

2023 breakdown of the future Referrers API technicals. 44 minutes

Second use case: General artifact support

As we started deploying containers en masse, we needed other files and objects semi-related to those containers. Sure, we could always use S3 or Git, but you may not have access to those from production container clusters. Helm, Tekton, seccomp/SELinux/AppArmor, OPA/Gatekeeper, Flux, Wasm, Compose, and Pulumi all fall into this category, and are at various levels of supporting OCI registries as a distribution model.

Then there are the Wild West artifacts of "anything that needs common HTTP storage for distribution." Packaged artifacts often need the kind of guarantees that OCI registries can make, such as SHA-hashing everything and making data universally content-addressable. The Homebrew package manager is a great example, as it switched to using the GitHub Container Registry in 2021 to serve over 50 million packages a month. Here's an example of returning some metadata about a Homebrew package image. It's not perfect (notice it says it's an image in the mediaType), but it clearly works.

    {
      "schemaVersion": 2,
      "manifests": [
        {
          "mediaType": "application/vnd.oci.image.manifest.v1+json",
          "digest": "sha256:3b7ebf540cd60769c993131195e796e715ff4abc37bd9a467603759264360664",
          "size": 1977,
          "platform": {
            "architecture": "amd64",
            "os": "darwin",
            "os.version": "macOS 13.0"
          },
          "annotations": {
            "org.opencontainers.image.ref.name": "3.40.1.ventura",
            "sh.brew.bottle.digest": "d3092d3c942b50278f82451449d2adc3d1dc1bd724e206ae49dd0def6eb6386d",
            "sh.brew.tab": "{\"homebrew_version\":\"3.6.16-97-ge76c55e\",\"changed_files\":[\"lib/pkgconfig/sqlite3.pc\"],\"source_modified_time\":1672237605,\"compiler\":\"clang\",\"runtime_dependencies\":[{\"full_name\":\"readline\",\"version\":\"8.2.1\",\"declared_directly\":true}],\"arch\":\"x86_64\",\"built_on\":{\"os\":\"Macintosh\",\"os_version\":\"macOS 13.0\",\"cpu_family\":\"penryn\",\"xcode\":\"14.1\",\"clt\":\"14.1.0.0.1.1666437224\",\"preferred_perl\":\"5.30\"}}"
          }
        }
      ]
    }

Part of the Homebrew OCI manifest JSON for sqlite
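To show how a client might consume metadata like this, here's a small Python sketch that picks the entry for a platform and decodes the `sh.brew.tab` annotation, which is itself a JSON document stored as a string. The sample data is a trimmed copy of the manifest above, and `find_bottle` and `bottle_tab` are hypothetical helper names, not Homebrew's own code.

```python
import json
from typing import Optional

# Trimmed copy of the index above; the tab annotation is shortened.
index = {
    "schemaVersion": 2,
    "manifests": [{
        "mediaType": "application/vnd.oci.image.manifest.v1+json",
        "digest": "sha256:3b7ebf540cd60769c993131195e796e715ff4abc37bd9a467603759264360664",
        "size": 1977,
        "platform": {"architecture": "amd64", "os": "darwin",
                     "os.version": "macOS 13.0"},
        "annotations": {
            "org.opencontainers.image.ref.name": "3.40.1.ventura",
            "sh.brew.tab": '{"compiler":"clang","arch":"x86_64"}',
        },
    }],
}


def find_bottle(idx: dict, architecture: str, os_version: str) -> Optional[dict]:
    """Return the first entry whose platform matches, or None if nothing matches."""
    for entry in idx.get("manifests", []):
        platform = entry.get("platform", {})
        if (platform.get("architecture") == architecture
                and platform.get("os.version") == os_version):
            return entry
    return None


def bottle_tab(entry: dict) -> dict:
    """Decode the JSON string stored in the 'sh.brew.tab' annotation."""
    return json.loads(entry["annotations"]["sh.brew.tab"])


ventura = find_bottle(index, "amd64", "macOS 13.0")
```

This is the same platform-selection pattern a container runtime uses for multi-arch images, reused here to pick a package build per macOS version.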

Unlike my "first use case" above, these generic artifact types will likely not need the Referrers API to indicate a relationship to a container image directly, though they may find some internal benefit by using the Referrers API for other manifest-to-manifest relationships. That's yet to be seen.

But what these tools do need is a clear path for how to officially store their various artifact types in a registry. We didn't really have that before, and it always felt a bit hacky to overload the existing registry metadata objects of image and layers to store non-image and non-layer data.

As a workaround years ago, the ORAS project was created and eventually accepted by the CNCF. "OCI Registry As Storage" is both a CLI and a Go library that lets you push/pull any data type you want into an existing OCI registry. It's quite popular and used in many other tools and cloud registries to store artifacts.

This idea of overloading the existing OCI Distribution 1.0 spec for "general artifact storage" had side effects. Mainly, support beyond container images doesn't work everywhere because some registries don't support various changes to the manifest. Also, many registry UIs don't know how to display these data types, which often results in weird-looking image tags, unknown/unknown types, or artifacts not being displayed in the UI at all.
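Here's a hedged Python sketch of how a registry UI might work out what kind of thing a manifest is. It assumes the common 1.0-era convention of signaling the type through the config blob's media type (Helm charts use `application/vnd.cncf.helm.config.v1+json`), and prefers an explicit `artifactType` field when a manifest has one. The function name and the "unknown" fallback are made up for this example.

```python
def effective_artifact_type(manifest: dict) -> str:
    """Prefer an explicit artifactType, then fall back to the config media type."""
    explicit = manifest.get("artifactType")
    if explicit:
        return explicit
    return manifest.get("config", {}).get("mediaType", "unknown")


# A 1.0-style Helm chart manifest: the type is only visible via the config.
# Digests are shortened placeholders.
helm_chart = {
    "schemaVersion": 2,
    "config": {
        "mediaType": "application/vnd.cncf.helm.config.v1+json",
        "digest": "sha256:aaa",
        "size": 117,
    },
    "layers": [{
        "mediaType": "application/vnd.cncf.helm.chart.content.v1.tar+gzip",
        "digest": "sha256:bbb",
        "size": 2048,
    }],
}

chart_type = effective_artifact_type(helm_chart)
```

A UI that only recognizes container image config types has nothing sensible to show for a manifest like this, which is roughly where the unknown/unknown labels come from.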

The ORAS, CNCF, and OCI teams have a vision though. And this talk by Docker's Steve Lasker (who worked at Azure before Docker) is a great story of all the needs we have for artifacts and what they are doing about it. Note that some of the stuff about new features near the end of this 2022 talk is outdated in the implementation details of the OCI Distribution 1.1 release, but it's still good to see the examples.

2022 KubeCon EU overview, vision, and walkthrough of OCI artifacts. 40 minutes

With the 1.1 releases of the image spec and distribution spec, the OCI added a few fields to the manifest to address the drawbacks of the previous generic artifact implementation, including an artifactType that lets tool creators define their own type, which should be supported by any registry that updates to OCI Distribution 1.1.
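For a feel of what a 1.1-style artifact manifest looks like, here's a minimal Python sketch that builds one as a plain dictionary. It assumes the 1.1 guidance of using an empty JSON config (`application/vnd.oci.empty.v1+json`) when the artifact has no real config, plus the optional `subject` field that links an artifact to another manifest. The artifact type, payload, and subject digest are placeholders, and nothing is pushed anywhere.

```python
import hashlib
from typing import Optional

EMPTY_JSON = b"{}"  # content of the "empty" config blob


def descriptor(media_type: str, data: bytes) -> dict:
    """Describe a blob by media type, sha256 digest, and size."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(data).hexdigest(),
        "size": len(data),
    }


def artifact_manifest(artifact_type: str, payload: bytes,
                      payload_media_type: str,
                      subject: Optional[dict] = None) -> dict:
    """Build an image manifest that carries a non-image artifact."""
    manifest = {
        "schemaVersion": 2,
        "mediaType": "application/vnd.oci.image.manifest.v1+json",
        "artifactType": artifact_type,
        "config": descriptor("application/vnd.oci.empty.v1+json", EMPTY_JSON),
        "layers": [descriptor(payload_media_type, payload)],
    }
    if subject is not None:
        # 'subject' is what lets a registry list this artifact as a referrer.
        manifest["subject"] = subject
    return manifest


# Placeholder SBOM attached to a placeholder image manifest.
sbom_manifest = artifact_manifest(
    artifact_type="application/vnd.example.sbom.v1+json",
    payload=b'{"packages": []}',
    payload_media_type="application/json",
    subject={
        "mediaType": "application/vnd.oci.image.manifest.v1+json",
        "digest": "sha256:3b7ebf54",
        "size": 1977,
    },
)
```

Note that the outer `mediaType` is still an image manifest. The new fields describe the artifact without needing a whole new manifest format.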

How can you take advantage of OCI artifacts now and be ready for future OCI changes?

👉 Ensure you’re ready for OCI's future

So, what should we registry users do with the changes in OCI specs? In short, nothing. All your tools should work today and will just get better with 1.1. 😅

Remember that OCI is just a specification. You fine readers don’t use OCI directly; you use a registry or CLI that adheres to the OCI Distribution specification. For years, that has held steady at a ~1.0 release. Over the years of the OCI working toward this update, there have been many meetings, working groups, and PRs. Most tools implementing OCI specs have been following those plans and working to add planned changes in preparation for a General Availability release of the specs.

Since 1.1 is a minor release, and the significant changes are all done with backward compatibility in mind, I would not expect any existing tools to break if you’re using a 1.0 tool with a 1.1 registry or vice versa.

How can I use OCI artifacts today?

Remember the “two types” of artifacts I described earlier?

The first type, “image-related artifacts” (SBOMs, provenance, scans, and signatures), has had an inconsistent implementation across OCI registries, and the tools that produce them will likely need an update before they work well across all registries with proper registry-aware references (the Referrers API and the artifactType metadata). These tools include Cosign, Notation, BuildKit, Trivy, Syft, Grype, etc. The idea here is that we won’t need an additional tool like the ORAS CLI to upload these artifacts in a "1.1 spec-friendly way".

I hope these tools that already support container images will get updated to package their output into an OCI Distribution 1.1 artifact and co-locate them with the image they came from for easy finding.

But the 2nd type, the “unrelated artifacts” that don’t need special registry metadata to link two artifacts together… are the ones we can use freely today! Various registries have added UI support for specific artifact types, including Docker Hub identifying a few types by name and Harbor identifying types by their brand logo.

Here is a partial list of those "2nd type" tools

In my experience, most hosted registries allow these types, but not always. YMMV.

🐳 For the pure Docker fans out there, you can…

- Run Wasm Modules on an existing `wasi/wasm` runtime without needing a full container image.

- `docker compose publish username/my-compose-app` will store Docker Compose YAML as its own OCI artifact. Then, use `docker compose -f oci://docker.io/username/my-compose-app:latest up` to run that compose app without needing to clone a repo.

- Push and pull Docker Volumes with the Docker Desktop extension or my simple shell script.
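The `oci://` references in that Compose example follow a simple shape: registry host, repository path, and an optional tag or digest. Here's a small Python sketch that splits one apart. It is not how Docker Compose parses references; it assumes the registry host is always written out explicitly, and `OciReference` and `parse_oci_reference` are names invented for this example.

```python
from typing import NamedTuple, Optional


class OciReference(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_oci_reference(ref: str) -> OciReference:
    """Split 'oci://<registry>/<repository>[:tag][@digest]' into its parts."""
    prefix = "oci://"
    if not ref.startswith(prefix):
        raise ValueError("expected a reference starting with oci://")
    rest = ref[len(prefix):]
    # The first path segment is the registry host (it may include a port).
    registry, sep, path = rest.partition("/")
    if not sep or not path:
        raise ValueError("expected oci://<registry>/<repository>")
    digest = None
    if "@" in path:
        path, digest = path.split("@", 1)
    tag = None
    if ":" in path:
        path, tag = path.rsplit(":", 1)
    return OciReference(registry, path, tag, digest)


compose_ref = parse_oci_reference("oci://docker.io/username/my-compose-app:latest")
```

Splitting off the registry before looking for a tag keeps a host with a port, such as `localhost:5000`, from being mistaken for a tag separator.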

⎈ For the Kubernetes clusters and workloads, you can…

- Store Helm Charts in an OCI registry directly from the helm CLI.

- Deploy Gatekeeper, Kyverno, and Kubewarden policies to Kubernetes via OCI registries.

- Use the Kubernetes Security Profiles Operator (SPO) to deploy seccomp, SELinux, and AppArmor profiles to your clusters through SPO’s built-in support for OCI artifacts.

- For GitOps (maybe we call it ArtifactOps in this case), you can use Flux to pull your desired state from registries rather than git. Read and watch through the progress the Flux team has made so far in implementing OCI artifact support. They even have a cheatsheet.

- For Tekton, you can use OCI artifacts for Bundles and Chains.

- Control Falco rules with falcoctl on Linux and Kubernetes by distributing them through OCI artifacts.

🌍 And then there’s everything else…

- I’ve mentioned Homebrew as an early adopter of OCI artifacts for all your tools, serving through GitHub Container Registry at a rate of over 500 terabytes monthly. Here’s a breakdown of how their packages look in the manifest metadata.

- Store Dev Container Features (`devcontainer.json`) as OCI artifacts.

- Use IBM's package manager to package and deploy software to z/OS.

- Maybe someday, RPMs via OCI artifacts.

- Check out the list of tools using ORAS. Here's an incomplete list of tools conforming to the Distribution spec.